
PGP in Artificial Intelligence & Machine Learning: Business Applications

Master AI applications and secure a future-ready career

Application closes 19th Feb 2026

Program Outcomes

Elevate your career with advanced AI skills

Become an AI & Machine Learning expert

- Lead AI innovation by mastering core AI & ML concepts & technologies

- Build AI applications with GenAI, NLP, computer vision, predictive analytics, and recommendation systems

- Build an impressive, industry-ready portfolio with hands-on projects.

- Earn a bonus certificate in Python Foundations to strengthen your skills

Earn a certificate of completion

Key program highlights

Why choose the AI & ML program

- Learn from one of the world's top universities

Earn a certificate from a world-renowned university, taught by top faculty

- Industry-ready curriculum

Learn the foundations of Python, GenAI, and Deep Learning, gain valuable insights, and apply your skills to transition into AI roles

- Learn at your convenience

Gain access to 200+ hours of content online, including lectures, assignments, and live webinars, which you can access anytime, anywhere

- 7 hands-on projects & 20+ tools

Build projects using data from top companies such as Uber, Netflix, and Amazon, and get hands-on training through projects and case studies

- Get expert mentorship

Interact with mentors who are experts in AI and get guidance to complete and showcase your projects

- Personalized program support

Get 1:1 personal assistance from a Program Manager to complete your course with ease.

Skills you will learn

Programming Fundamentals

Machine Learning

Computer Vision

Generative AI

Foundational Skills Certification

Problem-Solving Skills

Portfolio Development

Deep Learning

Natural Language Processing

AI Applications


Secure top AI & machine learning jobs

- $15 trillion: estimated value AI could add to the global economy by 2030

- $118 billion: AI industry revenue

- Up to $150K: average annual salary

- 97 million: new jobs by 2025

Careers in AI & ML

Here are the AI job roles most sought after by companies in India

- AI Engineer

- Machine Learning Engineer

- AI Research Scientist

- Prompt Engineer

- Big Data Engineer

- NLP Engineer

- Deep Learning Engineer

- Business Intelligence Developer

- Computer Vision Engineer

- AI Consultant

Our alumni work at top companies


This program is ideal for

The PG program in AI & ML empowers you to align your learning with your professional aspirations


- Young professionals

Kickstart your career in AI with foundational & advanced skills, real-world projects, and industry insights to ease into new roles

- Mid-senior professionals

Advance to senior roles with leadership learning, practical experience, and advanced AI/ML concepts

- Project Managers

Effectively manage AI/ML projects from implementation to deployment with expertise in tools, methodologies, and best practices

- Tech Leaders

Lead AI innovation with strategic insights, advanced AI & ML skills, and the ability to drive business transformation

Experience a unique learning journey

Our pedagogy is designed to ensure career growth and transformation

- Learn with self-paced videos

Learn critical concepts from video lectures by faculty & AI experts

- Engage with your mentors

Clarify your doubts and gain practical skills during the weekend mentorship sessions

- Work on hands-on projects

Work on projects to apply the concepts & tools learnt in each module

- Get personalized assistance

Our dedicated program managers will support you whenever you need help

Syllabus designed for professionals

Designed by the faculty at the University of Texas at Austin

- 200+ hours of learning content

- 20+ languages & tools

- 40+ case studies

Foundations

The Foundations module comprises two courses, Python programming for Artificial Intelligence and Machine Learning, and Statistical Learning, where we get our hands dirty from the start. These two courses lay the groundwork for the rest of this Artificial Intelligence and Machine Learning online course so that you can progress through it with minimal hindrance. Welcome to the program.

Self-paced Module: Introduction to Data Science and AI

Gain an understanding of the evolution of AI and Data Science over time, their applications across industries, the mathematics and statistics behind them, and the life cycle of building data-driven solutions.

- The fascinating history of Data Science and AI

- Transforming Industries through Data Science and AI

- The Math and Stats underlying the technology

- Navigating the Data Science and AI Lifecycle

Self-paced Module: Python Pre-Work

This course provides a fundamental understanding of Python programming and builds a strong coding foundation for building AI and Machine Learning (ML) applications.

- Introduction to Python Programming

- AI Application Case Study

Module 1: Python Foundations

Python is an essential programming language in the toolkit of an AI & ML professional. In this course, you will learn the essentials of Python and its packages for data analysis and computing, including NumPy, SciPy, Pandas, Seaborn, and Matplotlib.

- Python Programming Fundamentals

Python is a widely used high-level, interpreted programming language with a simple, easy-to-learn syntax that emphasizes code readability.

This module will take you from Python syntax and essential fundamentals to executing your first code.

- Python for Data Science - NumPy and Pandas

NumPy is a Python package for scientific computing that provides multidimensional array objects, derived objects (such as masked arrays and matrices), and fast operations on arrays. Pandas is a fast, powerful, flexible, and easy-to-use open-source Python library for analyzing and manipulating data.

This module will give you a deep understanding of exploring data sets using Pandas and NumPy.

- Exploratory Data Analysis

Exploratory Data Analysis, or EDA, is essentially a type of storytelling for statisticians. It allows us to uncover patterns and insights, often with visual methods, within data.

This module will give you a deep insight into EDA in Python and the visualization tools Matplotlib and Seaborn.

- Data Pre-processing

Data preprocessing is a crucial step in any machine learning project and involves cleaning, transforming, and organizing raw data to improve its quality and usability. The preprocessed data is then used for both analysis and modeling.
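Here is a minimal illustration of two common cleaning and transformation steps. The plain-Python sketch below fills missing values with the column mean and then applies min-max scaling. The function names and the income figures are hypothetical. In practice, these steps are usually done with Pandas or scikit-learn.

```python
def impute_mean(values):
    """Replace missing entries (None) with the mean of the observed values."""
    observed = [v for v in values if v is not None]
    mean = sum(observed) / len(observed)
    return [mean if v is None else v for v in values]

def min_max_scale(values):
    """Rescale values linearly to the [0, 1] range."""
    lo, hi = min(values), max(values)
    if hi == lo:
        return [0.0 for _ in values]  # constant column: nothing to scale
    return [(v - lo) / (hi - lo) for v in values]

incomes = [40_000, None, 60_000, 80_000]  # hypothetical raw column with a gap
clean = impute_mean(incomes)              # [40000, 60000.0, 60000, 80000]
print(min_max_scale(clean))               # [0.0, 0.5, 0.5, 1.0]
```

In a real project, compute the mean, minimum, and maximum on the training data only. Then reuse those values on validation and test data so that no information leaks from those sets.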

- Analyzing Text Data

Text data is one of the most common forms of data and analyzing it plays a crucial role in extracting valuable insights from unstructured information in human language. This module covers different text processing and vectorization techniques to efficiently extract information from raw textual data.
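One of the simplest vectorization techniques is the bag-of-words model. It represents each document by how often each vocabulary word occurs in it. The sketch below assumes a naive tokenizer that lowercases the text and keeps runs of letters and digits. The function names and sample sentences are hypothetical.

```python
import re
from collections import Counter

def tokenize(text):
    """Lowercase the text and keep runs of letters/digits as tokens (a simplifying assumption)."""
    return re.findall(r"[a-z0-9]+", text.lower())

def bag_of_words(docs):
    """Return (vocabulary, count vectors) for a list of documents."""
    tokenized = [tokenize(d) for d in docs]
    vocab = sorted({tok for toks in tokenized for tok in toks})
    vectors = []
    for toks in tokenized:
        counts = Counter(toks)
        vectors.append([counts[word] for word in vocab])  # one count per vocabulary word
    return vocab, vectors

docs = ["The product is great", "Great service, great price"]
vocab, vectors = bag_of_words(docs)
print(vocab)    # ['great', 'is', 'price', 'product', 'service', 'the']
print(vectors)  # [[1, 1, 0, 1, 0, 1], [2, 0, 1, 0, 1, 0]]
```

Richer techniques, such as TF-IDF weighting or word embeddings, build on the same idea of turning raw text into numeric vectors.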

Self-paced Module: Statistical Learning

Statistical Learning is a branch of applied statistics that deals with Machine Learning, emphasizing statistical models and the assessment of uncertainty. This course lays the statistical foundation for the Artificial Intelligence and Machine Learning concepts covered in the rest of this program.

- Descriptive Statistics

Descriptive Statistics describes and summarizes a dataset, whether it is a sample of a region's population or the marks achieved by 50 students.

This module will help you understand Descriptive Statistics in Python for AI and ML.

- Inferential Statistics

Inferential Statistics uses sample data to estimate population characteristics and to test hypotheses. You will learn how to work with Inferential Statistics using Python.

- Probability & Conditional Probability

Probability is a mathematical tool for studying randomness, such as the likelihood of an event occurring in a random experiment. Conditional Probability is the likelihood of an event occurring given that another event has already occurred.

In this module, you will learn about Probability and Conditional Probability in Python for AI and ML.

- Hypothesis Testing

Hypothesis Testing is an essential Statistical Learning procedure for drawing conclusions from experiments based on observed/surveyed data.

You will learn the Hypothesis Testing methods used in AI and ML in this module.

- Chi-square & ANOVA

Chi-Square is a Hypothesis Testing method used in Statistics to measure how well a model's expected values match actual observed/surveyed data.

Analysis of Variance, also known as ANOVA, is a statistical technique used in AI and ML. ANOVA splits the observed variance in data into several components for further analysis and testing.

This module will teach you how to identify significant differences between the means of two or more groups.
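Here is a minimal sketch of what one-way ANOVA computes: the F statistic, which is the ratio of between-group variance to within-group variance. The function name and sample scores are hypothetical. The sketch stops at the statistic. Turning it into a p-value requires the F distribution; for example, `scipy.stats.f_oneway` returns both.

```python
def one_way_anova_f(*groups):
    """Return the one-way ANOVA F statistic for two or more groups of numbers."""
    k = len(groups)
    n = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n
    means = [sum(g) / len(g) for g in groups]
    # Between-group sum of squares: how far each group mean is from the grand mean
    ss_between = sum(len(g) * (m - grand_mean) ** 2 for g, m in zip(groups, means))
    # Within-group sum of squares: spread of observations around their own group mean
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, means) for x in g)
    ms_between = ss_between / (k - 1)  # degrees of freedom: k - 1
    ms_within = ss_within / (n - k)    # degrees of freedom: n - k
    return ms_between / ms_within

scores_a = [1, 2, 3]  # hypothetical test scores for three groups
scores_b = [4, 5, 6]
scores_c = [7, 8, 9]
print(one_way_anova_f(scores_a, scores_b, scores_c))  # 27.0
```

A large F value means the group means differ by more than the within-group noise would explain. Identical groups give an F value of 0.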

Machine Learning

The next module is the Machine Learning online course, where you will learn the main categories of Machine Learning techniques and the popular classical ML algorithms that fall into each category.

Module 2: Machine Learning

In this module, understand the concept of learning from data, build linear and non-linear models to capture the relationships between attributes and a known outcome, and discover patterns and segment data with no labels.

Supervised Machine Learning aims to build a model that makes predictions based on evidence in the presence of uncertainty. In this course, you will learn about the Supervised Learning algorithms Linear Regression and Logistic Regression.

- Linear Regression

Linear Regression is one of the most popular supervised ML algorithms used for predictive analysis. It assumes a linear relationship between the independent variable(s) and the dependent variable and fits the line that best describes the data. You will cover all the concepts of Linear Regression in this module.
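For a single independent variable, the least-squares line has a closed form. The slope is the covariance of x and y divided by the variance of x. The line also passes through the point of means. Here is a minimal plain-Python sketch. The function name and the data, generated from y = 1 + 2x, are hypothetical.

```python
def fit_simple_linear_regression(xs, ys):
    """Ordinary least squares fit of y = intercept + slope * x."""
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # slope = covariance(x, y) / variance(x); the 1/n factors cancel
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    slope = sxy / sxx
    intercept = mean_y - slope * mean_x  # the fitted line passes through (mean_x, mean_y)
    return intercept, slope

xs = [1, 2, 3, 4, 5]
ys = [3, 5, 7, 9, 11]  # hypothetical data generated from y = 1 + 2x
intercept, slope = fit_simple_linear_regression(xs, ys)
print(intercept, slope)  # 1.0 2.0
```

With several independent variables, the same idea is usually solved with matrix methods, for example with NumPy or scikit-learn.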

- Decision Trees

A decision tree is a Supervised ML algorithm, which is used for both classification and regression problems. It is a hierarchical structure where internal nodes indicate the dataset features, branches represent the decision rules, and each leaf node indicates the result.
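To show how an internal node picks its decision rule, here is a minimal sketch of a split search on one numeric feature using Gini impurity, one common splitting criterion. The function names, ages, and labels are hypothetical. This sketch uses observed values as thresholds, whereas production libraries typically place the threshold between adjacent values.

```python
def gini(labels):
    """Gini impurity: 1 minus the sum of squared class proportions."""
    n = len(labels)
    if n == 0:
        return 0.0
    counts = {}
    for label in labels:
        counts[label] = counts.get(label, 0) + 1
    return 1.0 - sum((c / n) ** 2 for c in counts.values())

def best_split(xs, labels):
    """Find the threshold (x <= t goes left) that minimizes the weighted Gini impurity."""
    best = (None, float("inf"))
    for threshold in sorted(set(xs)):
        left = [y for x, y in zip(xs, labels) if x <= threshold]
        right = [y for x, y in zip(xs, labels) if x > threshold]
        if not left or not right:
            continue  # skip splits that leave one side empty
        weighted = (len(left) * gini(left) + len(right) * gini(right)) / len(labels)
        if weighted < best[1]:
            best = (threshold, weighted)
    return best

ages = [22, 25, 30, 35, 40, 45]
bought = ["no", "no", "no", "yes", "yes", "yes"]  # hypothetical labels
print(best_split(ages, bought))  # (30, 0.0)
```

A full tree applies this search recursively to each resulting subset until a stopping rule, such as maximum depth, is reached.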

Unsupervised Learning finds hidden patterns or intrinsic structures in unlabeled data. In this machine learning online course, you will learn about commonly used clustering techniques such as K-Means Clustering and Hierarchical Clustering, along with Dimensionality Reduction techniques such as Principal Component Analysis.

- K-Means Clustering

K-means clustering is a popular unsupervised ML algorithm used to solve clustering problems in Machine Learning. In this module, you will learn how the algorithm works and then implement it.
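The standard way to run K-means is Lloyd's algorithm, which alternates two steps. First, assign each point to its nearest centroid. Then, move each centroid to the mean of its assigned points. It repeats until nothing moves. Here is a minimal plain-Python sketch. The function name, the fixed random seed, and the 2-D points are hypothetical choices for illustration.

```python
import math
import random

def kmeans(points, k, iterations=100, seed=0):
    """Lloyd's algorithm for k-means on a list of equal-length numeric tuples."""
    rng = random.Random(seed)
    centroids = rng.sample(points, k)  # start from k distinct data points
    for _ in range(iterations):
        # Assignment step: attach every point to its nearest centroid
        clusters = [[] for _ in range(k)]
        for p in points:
            nearest = min(range(k), key=lambda i: math.dist(p, centroids[i]))
            clusters[nearest].append(p)
        # Update step: move each centroid to the mean of its cluster
        new_centroids = [
            tuple(sum(coord) / len(cluster) for coord in zip(*cluster)) if cluster else centroids[i]
            for i, cluster in enumerate(clusters)
        ]
        if new_centroids == centroids:
            break  # converged: no centroid moved
        centroids = new_centroids
    return centroids, clusters

# Hypothetical 2-D data with two well-separated groups
points = [(1, 1), (1.5, 2), (1, 0.5), (8, 8), (9, 8.5), (8.5, 9)]
centroids, clusters = kmeans(points, k=2)
print(sorted(centroids))
```

The result can depend on the initial centroids, which is why practical implementations use smarter seeding (such as k-means++) and several restarts.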

Module 3: Advanced Machine Learning

Ensemble methods help to improve the predictive performance of Machine Learning models. In this machine learning online course, you will learn about different Ensemble methods that combine several Machine Learning techniques into one predictive model to decrease variance or bias, or to improve predictions.

- Bagging and Random Forests

In this module, you will learn Bagging, an essential Ensemble Method, and Random Forest, a popular supervised ML algorithm. Random Forest trains many decision trees on different random subsets of the dataset and averages their predictions (or takes a majority vote, for classification) to improve predictive accuracy.

- Boosting

Boosting is an Ensemble Method that trains models sequentially, with each new model focusing on the errors of the previous ones, to turn weak learners into a more stable and accurate model.

- Model Tuning

Model tuning is a crucial step in developing ML models and focuses on improving the performance of a model using different techniques like feature engineering, imbalance handling, regularization, and hyperparameter tuning to tweak the data and the model. This module covers the different techniques to tune the performance of an ML model to make it robust and generalized.

Artificial Intelligence & Deep Learning

The AI and Deep Learning course takes us beyond traditional ML into the realm of Neural Networks. From regular tabular data, we move on to training our models on unstructured data such as Text and Images.

Module 4: Introduction to Neural Networks

In this module, implement neural networks to synthesize knowledge from data, demonstrate an understanding of different optimization algorithms and regularization techniques, and evaluate the factors that contribute to improving performance to build generalized and robust neural network models to solve business problems.

- Deep Learning and its history

Deep Learning carries out the Machine Learning process using an ‘Artificial Neural Net’, which is composed of several levels arranged in a hierarchy. It has a rich history that can be traced back to the 1940s, but significant advancements occurred in the 2000s with the introduction of deep neural networks and the availability of large datasets and computational power.

- Multi-layer Perceptron

The multilayer perceptron (MLP) is a type of artificial neural network with multiple layers of interconnected neurons, including an input layer, one or more hidden layers, and an output layer. It is a versatile architecture capable of learning complex patterns from data.

- Activation functions

An activation function defines the output of a neuron given the weighted sum of its inputs. It introduces the non-linearity that lets a neural network learn complex patterns.
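Here is a minimal sketch of three widely used activation functions and a single neuron that applies one of them. The `neuron` helper and its arguments are hypothetical names for illustration. Deep learning frameworks provide vectorized versions of these functions.

```python
import math

def sigmoid(z):
    """Maps any real number into (0, 1)."""
    if z >= 0:
        return 1.0 / (1.0 + math.exp(-z))
    e = math.exp(z)  # this branch avoids overflow in math.exp for large negative z
    return e / (1.0 + e)

def tanh(z):
    """Maps any real number into (-1, 1)."""
    return math.tanh(z)

def relu(z):
    """Passes positive inputs through unchanged and sets negative inputs to 0."""
    return max(0.0, z)

def neuron(inputs, weights, bias, activation):
    """A single neuron: weighted sum of the inputs plus a bias, then the activation."""
    z = sum(w * x for w, x in zip(weights, inputs)) + bias
    return activation(z)

for f in (sigmoid, tanh, relu):
    print(f.__name__, [round(f(z), 3) for z in (-2.0, 0.0, 2.0)])
# sigmoid [0.119, 0.5, 0.881]
# tanh [-0.964, 0.0, 0.964]
# relu [0.0, 0.0, 2.0]
```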

- Backpropagation

Backpropagation is a key algorithm used in training artificial neural networks, enabling the calculation of gradients and the adjustment of weights and biases to iteratively improve the performance of a neural network.
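Backpropagation is the chain rule applied layer by layer, from the loss back to each weight. The sketch below uses a deliberately tiny hypothetical network: one input, one tanh hidden unit, one linear output, and squared-error loss. It computes the gradients by hand and then uses them for repeated gradient-descent updates. The parameter values, training example, and learning rate are assumptions for illustration.

```python
import math

def forward_backward(x, y, w1, b1, w2, b2):
    """Tiny network: x -> tanh hidden unit -> linear output, squared-error loss.

    Returns the loss and its gradient with respect to every parameter,
    computed with the chain rule (backpropagation).
    """
    # Forward pass
    z1 = w1 * x + b1
    h = math.tanh(z1)
    y_hat = w2 * h + b2
    loss = 0.5 * (y_hat - y) ** 2

    # Backward pass: propagate dLoss/d(output) back through each layer
    d_yhat = y_hat - y              # dL/dy_hat
    d_w2 = d_yhat * h               # dL/dw2
    d_b2 = d_yhat                   # dL/db2
    d_h = d_yhat * w2               # dL/dh
    d_z1 = d_h * (1.0 - h ** 2)     # tanh'(z1) = 1 - tanh(z1)^2
    d_w1 = d_z1 * x                 # dL/dw1
    d_b1 = d_z1                     # dL/db1
    return loss, {"w1": d_w1, "b1": d_b1, "w2": d_w2, "b2": d_b2}

# Repeated gradient steps on one hypothetical training example (x=2.0, y=1.0)
params = {"w1": 0.5, "b1": 0.0, "w2": -0.3, "b2": 0.1}
for step in range(200):
    loss, grads = forward_backward(2.0, 1.0, **params)
    for name in params:
        params[name] -= 0.1 * grads[name]  # learning rate 0.1
print(round(loss, 6))
```

Comparing the hand-derived gradients against finite differences is a standard way to check a backpropagation implementation.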

- Optimizers and their types

Optimizers are algorithms used to adjust the parameters of a neural network model during training to minimize the loss function. Common types of optimizers include Gradient Descent, RMSProp, and Adam.
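Here is a minimal sketch contrasting two of these optimizers on a toy one-parameter loss, f(x) = (x - 3)^2, whose minimum is at x = 3. Plain gradient descent steps against the gradient. Adam also keeps running averages of the gradient and the squared gradient to adapt the step size. The toy loss, learning rates, and step counts are assumptions for illustration. The Adam hyperparameters shown are its commonly used defaults.

```python
import math

def grad(x):
    """Gradient of the toy loss f(x) = (x - 3)^2."""
    return 2.0 * (x - 3.0)

def gradient_descent(x, lr=0.1, steps=100):
    for _ in range(steps):
        x -= lr * grad(x)  # step against the gradient
    return x

def adam(x, lr=0.1, steps=500, beta1=0.9, beta2=0.999, eps=1e-8):
    m, v = 0.0, 0.0  # running averages of the gradient and the squared gradient
    for t in range(1, steps + 1):
        g = grad(x)
        m = beta1 * m + (1 - beta1) * g
        v = beta2 * v + (1 - beta2) * g * g
        m_hat = m / (1 - beta1 ** t)  # bias correction for the zero initialization
        v_hat = v / (1 - beta2 ** t)
        x -= lr * m_hat / (math.sqrt(v_hat) + eps)
    return x

print(gradient_descent(0.0), adam(0.0))  # both end close to 3.0
```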

- Weight Initialization and Regularization

Weight initialization is the process of setting initial values for the weights of a neural network, which can significantly impact the model's training and convergence. Regularization is a technique used in machine learning and neural networks to prevent the model from overfitting, which helps improve the model's ability to generalize.

Module 5: Natural Language Processing with Generative AI

- Word Embeddings

- Attention Mechanism and Transformers

- Large Language Models and Prompt Engineering

- Retrieval Augmented Generation

Module 6: Introduction to Computer Vision

This course introduces you to the world of computer vision. You will learn image processing and different methods to extract informative features from images, build convolutional neural networks (CNNs) to unearth hidden patterns in image data, and leverage common CNN architectures to solve image classification problems.

- Image Processing

Computer Vision is a branch of AI that focuses on understanding and extracting meaningful insights from image data. This module provides an overview of the world of computer vision and covers techniques to process images and extract meaningful patterns from them.

- Convolutional Neural Networks

Given the complex nature of image data, convolutional neural networks (CNNs) are used to capture relevant spatial information in images. Transfer learning is a method of applying the knowledge gained while solving one problem to other, related problems. This module will cover the fundamentals of CNNs, how to build them from scratch, and how to leverage common CNN architectures via transfer learning to solve different image classification problems.
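The core operation of a CNN layer slides a small kernel over the image. At each position, it takes the dot product of the kernel with the patch under it. Here is a minimal plain-Python sketch with no padding and stride 1. As in common deep learning frameworks, it computes cross-correlation, meaning the kernel is not flipped. The tiny image and the hand-made edge-detecting kernel are hypothetical. A CNN learns its kernel weights during training.

```python
def conv2d(image, kernel):
    """'Valid' 2-D convolution (no padding, stride 1), as used in CNN layers.

    Like deep learning frameworks, this computes cross-correlation:
    the kernel is slid over the image without being flipped.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = [[0] * out_w for _ in range(out_h)]
    for i in range(out_h):
        for j in range(out_w):
            # Dot product of the kernel with the image patch under it
            output[i][j] = sum(
                kernel[a][b] * image[i + a][j + b]
                for a in range(kh)
                for b in range(kw)
            )
    return output

# A 4x4 image with a vertical edge between dark (0) and bright (9) columns
image = [
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
    [0, 0, 9, 9],
]
# Hypothetical vertical-edge detector; a CNN would learn weights like these
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 18, 0], [0, 18, 0], [0, 18, 0]]
```

The large values in the output mark where the edge is. This is the kind of spatial feature a CNN's early layers pick up.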

Module 7: Model Deployment

This course will help you comprehend the role of model deployment in realizing the value of an ML model and how to build and deploy an application using Python.

- Introduction to Model Deployment

- Containerization

Self-paced Module: Generative AI

Get an overview of Generative AI and of what ChatGPT is and how it works. Delve into the business applications of ChatGPT, and get an overview of other generative AI models and tools through demonstrations.

- ChatGPT and Generative AI - Overview

- ChatGPT - Applications and Business

- Breaking Down ChatGPT

- Limitations and Beyond ChatGPT

- Generative AI Demonstrations

Self-paced Module: Recommendation Systems

The last module in this Artificial Intelligence and Machine Learning online course is Recommendation Systems. A large number of companies use recommender systems, which are software that select products to recommend to individual customers. In this course, you will learn how to produce successful recommender systems that use past product purchase and satisfaction data to make high-quality personalized recommendations.

- Popularity-based Model

A popularity-based model is a recommendation system that recommends items based on their overall popularity or on what is currently trending.

- Market Basket Analysis

Market Basket Analysis, also called Affinity Analysis, is a modeling technique based on the theory that if you buy a certain group of items, you are more likely to buy another group of items.

- Content-based Model

A Content-based Recommendation System first collects data from the user, explicitly or implicitly. Next, it builds a user profile from this data and uses the profile to make suggestions. As the user provides more information or acts on more recommendations, the accuracy of the system improves.

- Collaborative Filtering

Collaborative Filtering is a family of algorithms that use various strategies to identify similar users or items in order to make the best recommendations.

- Hybrid Recommendation Systems

A Hybrid Recommendation system combines several classification models and clustering techniques. This module will teach you how to work with a Hybrid Recommendation system.
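As an illustration of the collaborative filtering idea described above, here is a minimal item-based sketch. Two items are treated as similar when the same users rated them similarly, using cosine similarity over raw ratings. A user's score for an unseen item is a similarity-weighted average of that user's own ratings. The users, items, ratings, and function names are hypothetical. Real systems often use adjusted ratings and restrict the average to the most similar neighbors.

```python
import math

# Hypothetical user -> {item: rating} data on a 1-5 scale
ratings = {
    "ana":   {"A": 5, "B": 3, "C": 4},
    "ben":   {"A": 4, "B": 2, "C": 5, "D": 4},
    "chloe": {"A": 1, "B": 5, "D": 2},
}

def item_vector(item):
    """Ratings an item received, keyed by user (a sparse column of the rating matrix)."""
    return {user: items[item] for user, items in ratings.items() if item in items}

def cosine(u, v):
    """Cosine similarity between two sparse vectors stored as dicts."""
    dot = sum(u[key] * v[key] for key in u.keys() & v.keys())
    norm_u = math.sqrt(sum(x * x for x in u.values()))
    norm_v = math.sqrt(sum(x * x for x in v.values()))
    return dot / (norm_u * norm_v) if norm_u and norm_v else 0.0

def predict(user, item):
    """Item-based CF: similarity-weighted average of the user's own ratings."""
    target = item_vector(item)
    numerator = denominator = 0.0
    for rated_item, rating in ratings[user].items():
        similarity = cosine(target, item_vector(rated_item))
        numerator += similarity * rating
        denominator += abs(similarity)
    return numerator / denominator if denominator else 0.0

print(round(predict("ana", "D"), 2))  # 3.98
```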

Self-paced Module: Multimodal Generative AI

Self-paced Module: Introduction to SQL

This course will help you gain an understanding of the core concepts of databases and SQL, gain practical experience writing simple SQL queries to filter, manipulate, and retrieve data from relational databases, and utilize complex SQL queries with joins, window functions, and subqueries for data extraction and manipulation to solve real-world data problems and extract actionable business insights.

- Introduction to DB and SQL

- Fetching, Filtering, and Aggregating Data

- Inbuilt and Window Functions

- Joins and Subqueries

Career Assistance: Resume and LinkedIn profile review, interview preparation, 1:1 career coaching

This post-graduate certificate program in artificial intelligence and machine learning will support you along your career path by helping you build your professional resume and reviewing your LinkedIn profile. The program also conducts mock interviews to boost your confidence and help you ace your professional interviews. In addition, it offers one-on-one career coaching with industry experts and guides you through a career fair.

Post Graduate Certificate from The University of Texas at Austin and 9 Continuing Education Units (CEUs)

Earn a Postgraduate Certificate in the top-rated Artificial Intelligence and Machine Learning online course from The University of Texas at Austin. The course's comprehensive curriculum will help you become a highly skilled professional in Artificial Intelligence and Machine Learning. It can help you land a job at one of the world's leading corporations and accelerate your career transition.

Hands-on learning & AI training

Build industry-relevant skills with projects guided by experts.

- 1,000+ projects completed

- 22+ domains

- 8 real-world projects

Master in-demand AI & ML tools

Get AI training with 20+ tools to enhance your workflow, optimize models, and build AI solutions

Earn a Professional Certificate in AI & ML

Get a PG certificate from one of the top universities in the USA and showcase it to your network


Meet your faculty

Learn from the top, world-renowned faculty at UT Austin

Interact with our mentors

Interact with dedicated AI and Machine Learning experts who will guide you in your learning and career journey

Get dedicated career support

- 1:1 career sessions

Interact personally with industry professionals to get valuable insights and guidance

- Interview preparation

Get an insider's perspective to understand what recruiters are looking for

- Resume & Profile review

Get your resume and LinkedIn profile reviewed by our experts to highlight your AI & ML skills & projects

- E-portfolio

Build an industry-ready portfolio to showcase your mastery of skills and tools

Course fees

The course fee is USD 4,200

Invest in your career

INSTALLMENT PLANS

Up to 12-month installment plans

Explore our flexible payment plans

Discount available

USD 4,000 (discounted from USD 4,200)

USD 4,050 (discounted from USD 4,200)

Third Party Credit Facilitators

Check out different payment options with third party credit facility providers

*Subject to third party credit facility provider approval based on applicable regions & eligibility

Admission Process

Admissions close once the required number of participants enroll. Apply early to secure your spot

1. Fill application form

Apply by filling out a simple online application form.

2. Interview Process

A panel from Great Learning will review your application to determine your fit for the program.

3. Join program

After a final review, you will receive an offer for a seat in the upcoming cohort of the program.

Course Eligibility

- Applicants should have a Bachelor's degree with a minimum of 50% aggregate marks or equivalent

- For candidates who do not know Python, we offer a free pre-program tutorial

Batch start date

Online · To be announced

Admissions Open

Frequently asked questions

Why should I choose this AI and Machine Learning course? What is unique about this AI course from the McCombs School of Business at The University of Texas at Austin?

The benefits of choosing this top-notch program include:

The UT Austin Advantage: The University of Texas at Austin, home of the McCombs School of Business, is a distinguished public research university. It offers world-class education, experiential learning, and cutting-edge research. The school's proven track record of delivering high-impact programs through modern teaching methods means you can be confident about learning from top experts.

Industry-Relevant Curriculum: Designed by the faculty and experts from the McCombs School, the comprehensive curriculum covers foundations of AI and ML, Statistics, Machine Learning, Deep Learning & Neural Networks, Computer Vision, and NLP. It focuses on practical business applications and hands-on learning to help you thrive in the fast-growing AI-ML field.

Programming Bootcamp: For learners with no programming background, this program offers an optional programming bootcamp, at no extra cost. The bootcamp prepares you to engage with advanced concepts in the program confidently.

Interactive Sessions: The program provides a chance to connect and network with peers through interactive micro-classes. These sessions deepen your understanding through collaboration and personalized mentor feedback, enhancing your learning and expanding your AI-ML community.

Hands-on Learning: The program’s practical approach enables you to grasp core AI-ML concepts and real-world applications. You’ll take on projects that help you build cutting-edge skills and tackle real business challenges.

Best-in-Class Faculty: Learn from leading academicians and industry experts dedicated to equipping you with practical AI and ML skills.

Industry-Relevant Projects: Complete 8+ hands-on projects across multiple modules during weekend sessions, by applying classroom concepts to real-world problems.

Live Online Mentorship and Webinars: Access live mentoring sessions and webinars with professionals from diverse backgrounds for insights, guidance on industry trends, and project support.

Earn a certificate from UT Austin: After the successful completion of the program, earn a certificate from a world-renowned university.

Flexibility: Gain access to 200+ hours of content online, including lectures, assignments, and live webinars, which you can access anytime, anywhere.

Great Learning Advantage: Receive personalized career support, including tailored guidance, resume and LinkedIn reviews, and mock interview sessions to help you succeed.

Can I pursue this course while working full-time?

Yes, this program is designed for working professionals. Its flexible online format, structured milestones, and dedicated mentor support make it easy to balance learning with your job, so you can upskill at a steady pace without pausing your career.

Will I receive alumni status or university credits?

No, learners who complete the PGP-AIML from the McCombs School of Business at The University of Texas at Austin do not receive alumni status. However, learners will earn 9 Continuing Education Units (CEUs), which reflect the time and effort dedicated to professional learning in this program.

What is the required weekly time commitment?

The program requires about 8-10 hours a week, which includes:

2-3 Hours of recorded lectures

2-hour mentored learning sessions on weekends (hands-on practice & problem-solving)

1 Hour of practice exercises or assessments

2-4 Hours of self-study and practice, based on your background

How will my performance be evaluated in the PGP AIML by UT Austin program?

There will be a continuous evaluation of your performance through quizzes, assignments, case studies, and project reports.

What is the PG Program in AI and Machine Learning about?

The Post Graduate Program in Artificial Intelligence and Machine Learning is offered by the McCombs School of Business at The University of Texas at Austin in collaboration with Great Learning. It is designed to provide a comprehensive, hands-on learning experience, including for learners with no prior programming background.

The course begins with foundational concepts in Python and progresses into advanced areas such as Deep Learning, Natural Language Processing, Computer Vision, and Generative AI.

With personalized mentorship, structured milestones, and collaborative peer interaction, learners are supported at every step to ensure consistent progress and meaningful outcomes.

What is the duration of this Texas McCombs AI ML program?

What is the structure of the Artificial Intelligence course?

What career opportunities will I get after completing this Artificial Intelligence course?

Completing this PGP-AIML can help open doors for you to a wide range of roles in the AI and data science space. Depending on your background and experience, you may explore opportunities such as:

AI Engineer

Machine Learning Engineer

Data Scientist

AI Specialist

Computer Vision Engineer

NLP Engineer

Business Analyst

Research Scientist (AI, ML, Deep Learning)

Robotics Scientist

Robotics Engineer

What role does Great Learning play in this AI course?

Great Learning partners with The McCombs School to deliver high-quality AI-ML education and personalized mentorship. Great Learning offers services that include:

- E-Portfolio for Projects: Build a standout portfolio showcasing your skills to employers.

- Resume Creation and Interview Preparation: Get career development support, including resume workshops and mock interviews.

- LinkedIn Profile Review: Receive expert guidance to optimize your professional profile for recruiters.

- Mock Interviews: Practice with industry professionals to sharpen your interview skills.

- 1:1 Career Guidance and Mentorship: Get tailored guidance from AI-ML experts to steer your career in the right direction.

Who are the industry mentors providing guidance throughout the program?

What is the Artificial Intelligence and Machine Learning course from The University of Texas at Austin’s McCombs School of Business?

Discover the power of Artificial Intelligence and Machine Learning at The University of Texas at Austin's McCombs School of Business.

Experience the remarkable capabilities of Artificial Intelligence (AI) and Machine Learning (ML) through the exceptional academic programs offered by The University of Texas at Austin's esteemed McCombs School of Business. This Post Graduate Program is designed to provide learners with essential analytical and practical skills, enabling them to lead organizations in the AI revolution. Taught through a combination of engaging lectures, hands-on demonstrations, live mentored learning, and live webinars, you will learn to apply newly emerging technologies in the workplace effectively.

This PGP in AI-ML at UT Austin includes a comprehensive curriculum empowering learners to master the basics of programming and the most widely used industry-relevant tools and techniques. With a unique approach, you will gain a solid foundation in AI-ML and be well-equipped to tackle real-world challenges.

With access to industry-standard resources and hands-on projects, you will gain practical experience to become an expert in the field through AI training. The Post Graduate Program’s dedicated mentors and career guidance will also support your transition to a lucrative career in Artificial Intelligence and Machine Learning.

What is the ranking of The University of Texas at Austin (UT Austin)?

The Financial Times 2022 placed UT Austin 6th globally for Executive Education - Custom Programs.

What is the curriculum of the McCombs School of Business at the University of Texas at Austin AI and Machine Learning program?

The curriculum of this program covers:

Foundations of AI and ML: Python, NumPy, Pandas, Matplotlib, Seaborn, Exploratory Data Analysis, Statistics.

Machine Learning Concepts: Supervised learning, ensemble techniques, feature engineering, model tuning, unsupervised learning, AI engineering, model deployment.

AI & Deep Learning: Neural networks, TensorFlow, Keras, computer vision, natural language processing, recommendation systems.

What are the learning outcomes of the online AI and Machine Learning course from the McCombs School of Business at The University of Texas at Austin?

By the end of this program, you will:

Gain familiarity with industry-relevant AI and Machine Learning tools and technologies.

Apply AI and ML techniques through hands-on projects that address practical business challenges.

Build expertise in designing solutions using Machine Learning and Deep Learning methods.

Develop skills in key application areas such as Natural Language Processing (NLP) and Computer Vision.

Understand the transformative role of AI across sectors and how it is reshaping modern industries.

Build a project-based AI and ML portfolio that demonstrates your capabilities and applied knowledge.

Which languages and tools will I learn in this Artificial Intelligence course?

What projects are included in the UT Austin Machine Learning certificate program?

The projects in this program are designed to build industry-relevant skills under expert guidance. Learners will complete 8+ projects, including:

Airplane Passenger Satisfaction Prediction – Marketing

Facebook Comments Prediction – Social Media

West Nile Virus Prediction – Social + Healthcare

Insurance Premium Default Propensity Prediction – Insurance

Retail Sales Prediction – Retail

Loan Customer Identification – Banking

CEO Compensation – HR

Insurance Data Visualization – Insurance

Who are the faculty members teaching this AI course?

What certificate will I receive after completing this AI and Machine Learning certificate course from the McCombs School of Business?

Upon completing the program, you will earn the prestigious Post Graduate Certificate in Artificial Intelligence and Machine Learning: Business Applications from the McCombs School of Business at The University of Texas at Austin.

This certificate validates your mastery of AI-ML skills and enhances your career prospects.

Who is the AI and Machine Learning program ideal for?

This AI and Machine Learning program is ideal for:

Young professionals who want to kickstart their career in the AI domain.

Mid-senior professionals who want to step into senior roles with advanced AI skills.

Project Managers who want to effectively manage AI/ML projects through best practices.

Tech Leaders who want to lead AI innovation with strategic insights and advanced AI/ML skills.

What is the admission process of the AI and Machine Learning course offered by the McCombs School of Business at The University of Texas at Austin?

Fill application form: Apply by filling out a simple online application form.

Interview process: A panel from Great Learning will review your application to determine your fit for the program.

Join program: After a final review, you will receive an offer for a seat in the upcoming cohort of the program.

When is the application deadline for this course?

Applications are reviewed on a rolling basis until all cohort seats are filled. We recommend applying early to improve your chances and allow ample preparation time.

What are the eligibility criteria for enrolling in this AI and Machine Learning online course offered by the McCombs School of Business at The University of Texas at Austin?

To be eligible for this program, you need to have:

A bachelor’s or undergraduate degree with at least 50% aggregate marks or equivalent.

No prior programming experience is required.

What payment methods are available to pay my course fee?

For assistance, contact aiml.utaustin@mygreatlearning.com or call +1 512-861-6570.

Are there any additional expenses related to buying books, online resources, or license fees?

Does this program accept corporate sponsorships?

Contact us at +1 512-861-6570 for details.

What is the AI/ML course fee to pursue this PG Program?

Why should I take up AI training?

AI and Machine Learning are driving innovation in healthcare, finance, retail, and many other sectors. Learning these skills can help you keep up with changes in your field, discover new job opportunities, and solve complex problems using data-driven techniques. Whether you want to work in tech or upskill in your current job, developing AI and ML skills can make you more competitive.

How do I know if AI and Machine Learning skills are right for my career path?

If you are interested in data, enjoy solving complex problems, and are curious about how technology can be used to make better business decisions, AI and Machine Learning courses can be a great fit for you. These skills are in high demand across industries.

Whether you're looking to move into a more technical role or add advanced capabilities to your current profession, learning AI and ML can open new and diverse career opportunities for you.

What are the most popular tools and programming languages used in AI and ML?

The most popular tools and programming languages used in AI and ML include:

Python, the most widely used language

Jupyter Notebooks

Google Colab

How is AI being used in emerging technologies like Generative AI and autonomous systems?

Generative AI models create original content such as text, images, music, and even code by learning patterns from large datasets.

AI allows autonomous systems to understand their surroundings through sensors, make real-time decisions, and navigate without human intervention. Examples include self-driving vehicles and drones. These advances are making transportation, delivery, and manufacturing more efficient and easier to manage.

What industries are hiring AI and ML professionals the most?

AI and Machine Learning skills are in high demand across many industries, including technology, healthcare, finance, retail, manufacturing, and logistics. These industries are looking for professionals who can analyze data, build intelligent systems, and drive innovation.

Delivered in Collaboration with:

McCombs School of Business at The University of Texas at Austin is collaborating with Great Learning to deliver this program in Artificial Intelligence and Machine Learning: Business Applications to learners from around the world. Great Learning is an ed-tech company that has empowered learners from 14+ countries to achieve positive outcomes in their career growth.

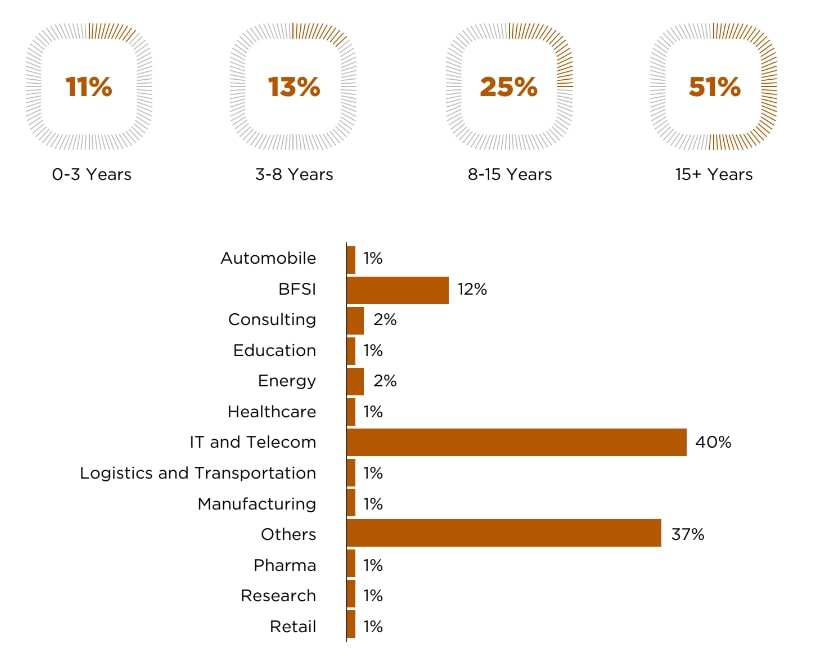

Batch Profile

The PGP-Artificial Intelligence and Machine Learning class represents a diverse mix of work experience, industries, and geographies, creating a truly global and eclectic learning experience.

Learners in the PGP-Artificial Intelligence and Machine Learning class come from some of the leading organizations.
